What are LLMs and how can they help you do research?

Learn what Large Language Models (LLMs) are and how they can energize your PhD research. Discover how AI can help you manage hundreds of papers and uncover novel connections in your field.

How Can AI for Research Ease Your PhD Journey?

If you're deep into your PhD journey, you've probably found yourself drowning in a sea of papers. Hundreds of PDFs scattered across folders, bookmarks that lead nowhere, and that nagging feeling that somewhere in your digital library lies the perfect connection that could elevate your research—if only you could find it.

You likely started your academic career because you were passionate about discovery, about pushing the boundaries of human knowledge in your field. Yet here you are, spending more time managing papers than actually doing the research you love.

Let's be honest: the manual process is grueling. You spend countless hours organizing PDFs, scribbling notes in the margins, and trying to recall where you saw that one crucial paragraph. It feels like you need a second brain just to manage your primary one. This is where Large Language Models (LLMs) come in.

What Exactly Are Large Language Models (LLMs)?

Large Language Models (LLMs) are AI systems trained on vast amounts of text from across the internet, books, and academic literature. Think of them as incredibly well-read research assistants who have absorbed knowledge from hundreds of disciplines—from quantum physics to medieval literature, from computational biology to social psychology.

Unlike traditional search engines that match keywords, LLMs understand context, nuance, and the subtle connections between ideas. They can recognize that a paper about network theory in sociology might be relevant to your computer science research on distributed systems, or that techniques from cognitive psychology could inform your work in human-computer interaction.

The Transformer Architecture: A Quick Look

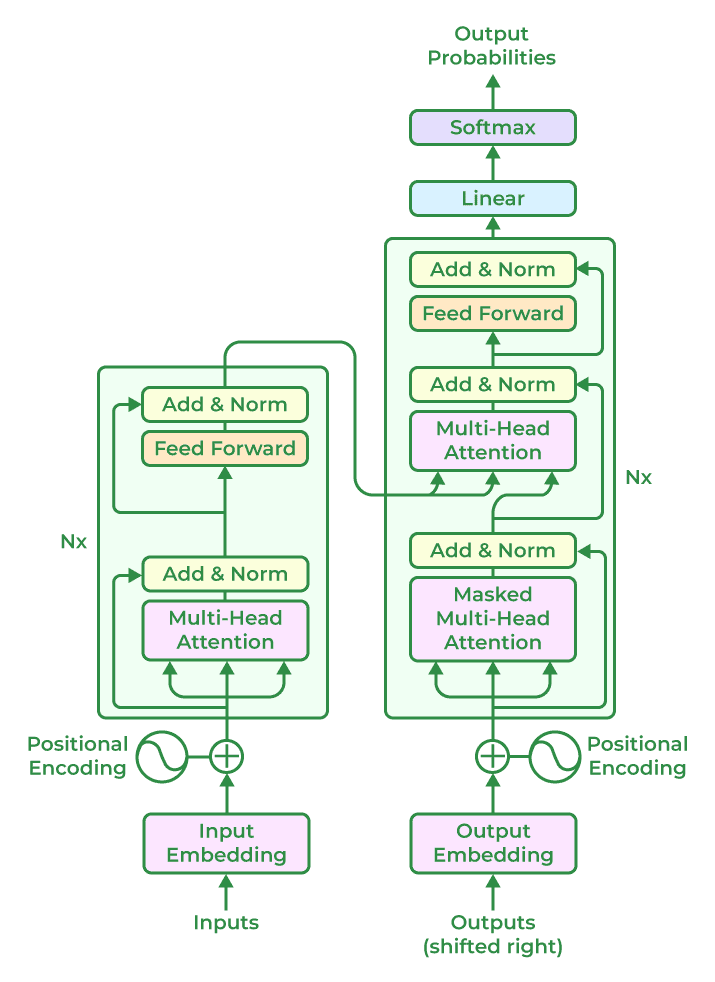

The diagram above illustrates a "transformer-based" architecture, which is the foundation for most modern LLMs. At its core, this architecture is designed to handle sequential data, like text, by using a mechanism called "self-attention." This allows the model to weigh the importance of different words in the input when processing and generating language.
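To make "self-attention" concrete, here is a minimal sketch in plain Python (no ML libraries) of single-head scaled dot-product attention. It is illustrative only, not how any production LLM is written: the function names are our own, and for simplicity the same toy vectors serve as queries, keys, and values, whereas a real model computes those three from learned projections of each token.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors (one per token)."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token against every token, scaled by sqrt(d_k) ...
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        # ... convert the scores into attention weights ...
        weights = softmax(scores)
        # ... and blend the value vectors according to those weights.
        blended = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy 2-dimensional "token" vectors, reused as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(tokens, tokens, tokens)
print([round(w, 2) for w in weights[0]])  # [0.4, 0.2, 0.4]
```

Each row of `weights` says how strongly one token attends to every token, and each row sums to 1. The first token attends equally to itself and to the third token (both have a 1 in the first dimension) and less to the second, which shares nothing with it.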

Here’s a simplified breakdown of what’s happening:

- Input & Positional Encoding: The model splits your text into tokens (words or pieces of words), turns each token into a vector, and adds positional information to it. This is crucial because self-attention on its own has no built-in sense of word order.

- Multi-Head Attention: This is the powerhouse of the transformer. It lets the model look at the input from several perspectives at once. Each "head" can learn to focus on a different kind of relationship between words, capturing different nuances in the text.

- Feed-Forward Networks: After the attention step, each token's representation is passed through a small feed-forward neural network, which processes it further and helps the model learn more complex patterns.

- Softmax & Output: Finally, the softmax function converts the model's final scores into probabilities over its vocabulary. These probabilities are used to predict the next token, and the output is generated one token at a time.
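The four steps above can be strung together in a toy, forward-pass-only sketch in plain Python. Everything here is an assumption made for illustration: the weights are random (so the "prediction" is meaningless), the dimensions and the four-word vocabulary are made up, and it omits parts a real transformer has, such as residual connections, layer normalization, causal masking, and many stacked layers. The positional encoding follows the sinusoidal scheme from the original Transformer paper; many modern LLMs use other schemes.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the toy run is reproducible


def matmul(vec, mat):
    """Multiply a row vector of length n by an n x m matrix (a list of rows)."""
    return [sum(v * mat[i][j] for i, v in enumerate(vec)) for j in range(len(mat[0]))]


def rand_matrix(rows, cols):
    """Random stand-in for weights a real model would learn during training."""
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Step 1: sinusoidal positional encoding (sin on even dimensions, cos on odd ones).
def positional_encoding(pos, d_model):
    return [math.sin(pos / 10000 ** (i / d_model)) if i % 2 == 0
            else math.cos(pos / 10000 ** ((i - 1) / d_model))
            for i in range(d_model)]


# Step 2: multi-head attention. Each head has its own query/key/value projections.
def attention_head(xs, wq, wk, wv):
    qs = [matmul(x, wq) for x in xs]
    ks = [matmul(x, wk) for x in xs]
    vs = [matmul(x, wv) for x in xs]
    d_k = len(ks[0])
    out = []
    for q in qs:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in ks])
        out.append([sum(w * v[j] for w, v in zip(weights, vs)) for j in range(len(vs[0]))])
    return out


def multi_head_attention(xs, heads, wo):
    per_head = [attention_head(xs, *h) for h in heads]
    # Concatenate every head's output for each token, then mix them with wo.
    return [matmul(sum((h[t] for h in per_head), []), wo) for t in range(len(xs))]


# Step 3: position-wise feed-forward network, the same small MLP applied to every token.
def feed_forward(x, w1, w2):
    hidden = [max(0.0, h) for h in matmul(x, w1)]  # ReLU activation
    return matmul(hidden, w2)


# Toy model: 8-dimensional vectors, 2 heads, a made-up 4-word vocabulary.
d_model, n_heads, d_ff = 8, 2, 16
vocab = ["the", "cat", "sat", "down"]
embeddings = {w: [rng.gauss(0, 1) for _ in range(d_model)] for w in vocab}
heads = [tuple(rand_matrix(d_model, d_model // n_heads) for _ in range(3)) for _ in range(n_heads)]
wo = rand_matrix(d_model, d_model)
w1, w2 = rand_matrix(d_model, d_ff), rand_matrix(d_ff, d_model)
w_out = rand_matrix(d_model, len(vocab))


def next_token_probs(words):
    # Step 1: token embedding plus positional encoding.
    xs = [[e + p for e, p in zip(embeddings[w], positional_encoding(i, d_model))]
          for i, w in enumerate(words)]
    xs = multi_head_attention(xs, heads, wo)    # Step 2
    xs = [feed_forward(x, w1, w2) for x in xs]  # Step 3
    # Step 4: softmax over the vocabulary, using the last token's representation.
    return dict(zip(vocab, softmax(matmul(xs[-1], w_out))))


probs = next_token_probs(["the", "cat", "sat"])
print({w: round(p, 3) for w, p in probs.items()})  # random weights, so the values are meaningless
```

Training is what turns those random matrices into useful ones; the data flow (encode positions, attend, transform, score the vocabulary) is the part this sketch is meant to show.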

In essence, the transformer architecture enables LLMs to understand long-range dependencies in text, making them incredibly effective at tasks like translation, summarization, and question-answering.

The Research Management Nightmare

Okay, so what kinds of pressures are you facing as a researcher?

The Information Overload: Your research folder contains 347 papers. You've read abstracts for most, fully digested maybe 50, and you have a vague memory of "that paper about X" that you can never quite locate when you need it.

The Connection Problem: You suspect there are brilliant insights hiding in the intersections between your field and others, but you don't have time to read everything in adjacent disciplines, let alone identify those crucial connections.

The Context Switching: Every time you dive into literature review mode, you lose momentum on your actual research. You spend hours following citation trails that lead to dead ends, or worse, rabbit holes that consume entire days.

The Publication Pressure: With the pressure to publish, you need to ensure your work is thoroughly grounded in existing literature, but comprehensive literature reviews feel like full-time jobs in themselves.

How LLMs Can Transform Your Research Process

Here's where LLMs become game-changers for researchers. Because they've been trained across disciplines, they excel at:

Cross-Disciplinary Discovery: An LLM might notice that your machine learning approach shares fundamental principles with a technique used in neuroscience research, opening up entirely new avenues for your work.

Pattern Recognition: They can identify recurring themes, methodologies, or findings across your corpus of papers that might not be immediately obvious when you're reading them individually.

Intelligent Summarization: Instead of re-reading entire papers to remember their key contributions, you can get contextual summaries that highlight exactly what's relevant to your current question.

Hypothesis Generation: By understanding the gaps and patterns in your research area, LLMs can help suggest novel research directions or identify under-explored connections.

The Open Paper Advantage: Staying Grounded in Your Research

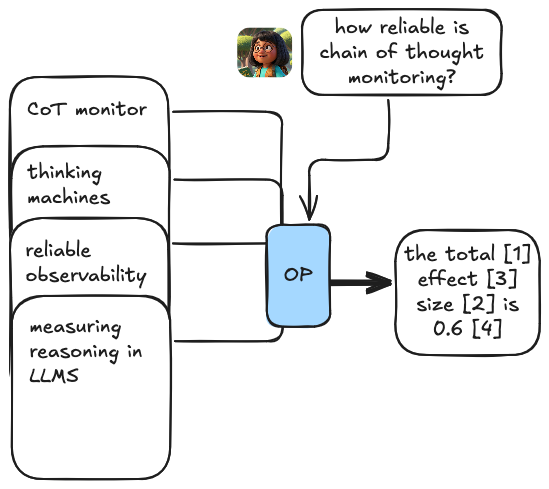

There is a lot of well-deserved skepticism about LLMs amongst researchers, especially when it comes to their reliability and accuracy. After all, they are trained on a vast corpus of text that includes both high-quality research and less reliable sources. This is why Open Paper takes a different approach.

While LLMs are powerful, they're not perfect. Generic AI assistants can sometimes "hallucinate," generating plausible-sounding but incorrect information.

Instead of relying on the AI's general training, Open Paper keeps you anchored in your actual corpus of research. When you ask questions or seek connections, the system works within the context of your specific papers. This means:

- Fewer Hallucinations: Every insight, connection, or summary is grounded in papers you've actually collected, not in the model's general training

- Source Transparency: You can always trace back to see exactly which papers informed any AI-generated insight

- Contextual Intelligence: The AI understands not just individual papers, but how they relate to each other within your specific research domain
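One common way to keep an assistant anchored to a specific collection is retrieval: find the passages from your own papers that are most relevant to a question, hand only those to the model, and ask it to cite them. The sketch below illustrates that general pattern; it is not Open Paper's actual implementation, and the function names, prompt wording, and three-paper library are hypothetical. To stay dependency-free it ranks passages by word-count cosine similarity, where real systems typically use learned embeddings, and it stops at building the prompt rather than calling any particular model API.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, library, k=2):
    """Return up to k (source, passage) pairs, most similar first, skipping any with no words in common."""
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(text))), source, text) for source, text in library.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [(source, text) for score, source, text in scored[:k] if score > 0]


def build_grounded_prompt(question, passages):
    """Hypothetical prompt: the model may only use the labeled excerpts and must cite them."""
    context = "\n".join(f"[{source}] {text}" for source, text in passages)
    return ("Answer using ONLY the excerpts below, citing the [source] of each claim. "
            "If they do not contain the answer, say so.\n\n"
            f"{context}\n\nQuestion: {question}")


# A made-up three-paper library keyed by hypothetical source labels.
library = {
    "paper_a": "Gossip protocols spread updates through a network of peers.",
    "paper_b": "Weak ties in social networks carry novel information between groups.",
    "paper_c": "Transformers use self-attention to model long-range dependencies.",
}
question = "How do updates spread through a peer network?"
passages = retrieve(question, library)
prompt = build_grounded_prompt(question, passages)
print(prompt)
```

Here only paper_a shares vocabulary with the question, so it is the only excerpt passed along. Note that paper_b's "networks" does not match "network": exact word matching misses such near-misses, which is one reason embedding-based retrieval is preferred in practice. Because each excerpt carries its [source] label into the prompt, an answer can be traced back to the paper it came from.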

Reclaiming Your Time for What Matters

Remember why you started this journey. You became a researcher because you wanted to push boundaries, ask important questions, and contribute something meaningful to human knowledge. You didn't sign up to become a babysitter of documents.

By leveraging AI for research in the right places—for literature organization, connection discovery, and research management—you can spend more time on the creative, analytical work that drew you to academia in the first place. Instead of spending your Saturday morning trying to remember which paper discussed that specific methodology, you can focus on designing experiments, developing theories, and writing compelling arguments.

Open Paper isn't about replacing your expertise or critical thinking. It's about amplifying your capabilities and removing the friction that keeps you from doing your best work. It's about turning your vast collection of papers from a burden into a superpower.

The Path Forward

The landscape of academic research is evolving, and the researchers who thrive will be those who effectively combine human insight with AI capabilities. LLMs offer unprecedented opportunities to synthesize knowledge across disciplines and manage the growing complexity of academic literature.

It might feel like you're 'surviving' your way through your PhD, rather than making novel, interesting discoveries. With the right tools helping you navigate the information landscape, you can spend less time on digital paper shuffling and more time on the breakthrough insights that will define your career.

Your research deserves your full attention. Let AI for research handle the rest.